publications

2025

-

Unifying Causal Representation Learning with the Invariance Principle
Dingling Yao, Dario Rancati, Riccardo Cadei, Marco Fumero, and Francesco Locatello
International Conference on Learning Representations (ICLR 2025), 2025

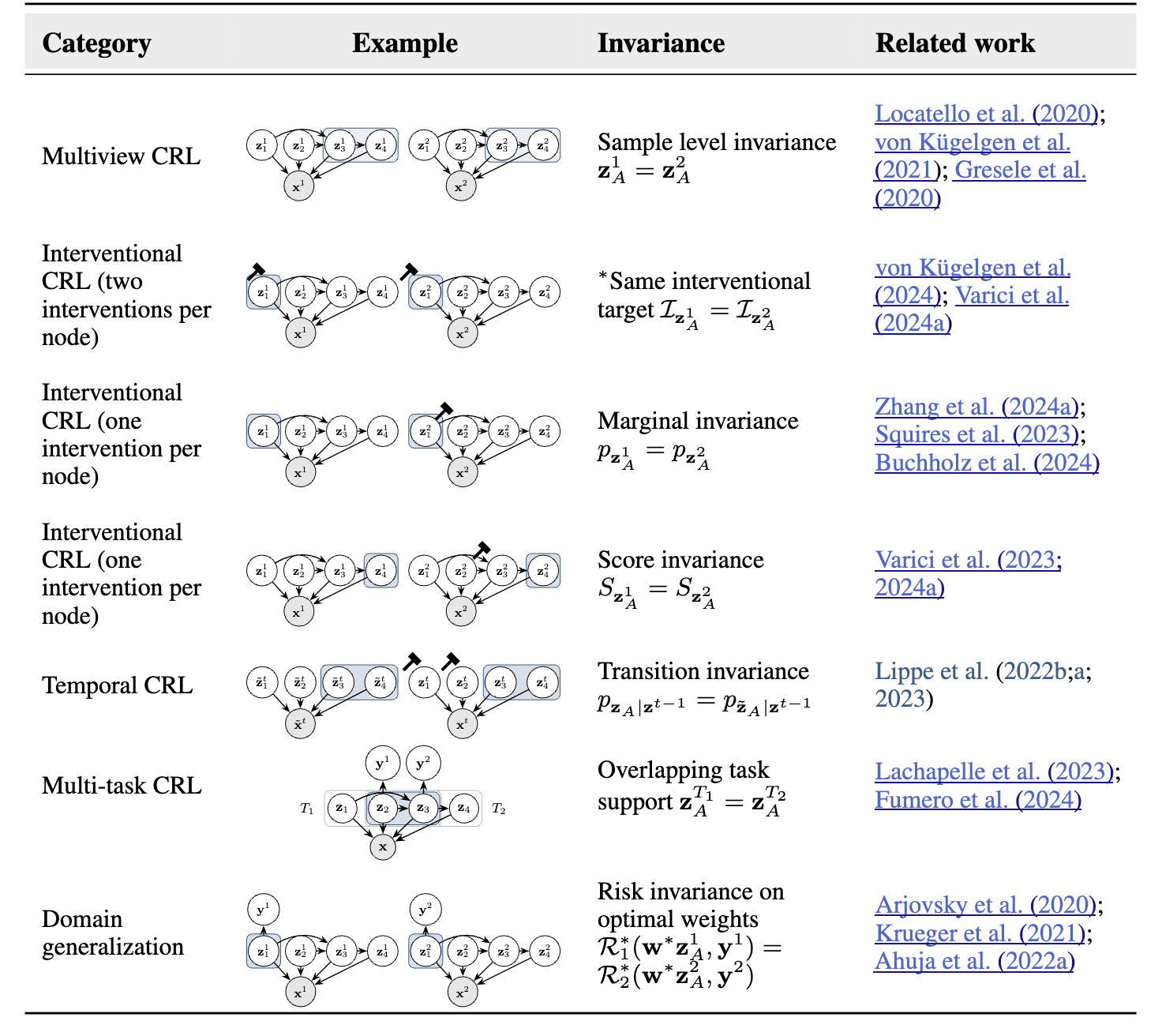

Causal representation learning (CRL) aims at recovering latent causal variables from high-dimensional observations to solve causal downstream tasks, such as predicting the effect of new interventions or more robust classification. A plethora of methods have been developed, each tackling carefully crafted problem settings that lead to different types of identifiability. These different settings are widely assumed to be important because they are often linked to different rungs of Pearl’s causal hierarchy, even though this correspondence is not always exact. This work shows that instead of strictly conforming to this hierarchical mapping, many causal representation learning approaches methodologically align their representations with inherent data symmetries. Identification of causal variables is guided by invariance principles that are not necessarily causal. This result allows us to unify many existing approaches in a single method that can mix and match different assumptions, including non-causal ones, based on the invariance relevant to the problem at hand. It also significantly benefits applicability, which we demonstrate by improving treatment effect estimation on real-world high-dimensional ecological data. Overall, this paper clarifies the role of causal assumptions in the discovery of causal variables and shifts the focus to preserving data symmetries.

@article{yao2024unifying,
  title = {Unifying Causal Representation Learning with the Invariance Principle},
  author = {Yao, Dingling and Rancati, Dario and Cadei, Riccardo and Fumero, Marco and Locatello, Francesco},
  journal = {International Conference on Learning Representations (ICLR 2025)},
  year = {2025},
}

-

Scalable Mechanistic Neural Networks
Jiale Chen, Dingling Yao, Adeel Pervez, Dan Alistarh, and Francesco Locatello
In International Conference on Learning Representations (ICLR 2025), 2025

We propose Scalable Mechanistic Neural Network (S-MNN), an enhanced neural network framework designed for scientific machine learning applications involving long temporal sequences. By reformulating the original Mechanistic Neural Network (MNN) (Pervez et al., 2024), we reduce the computational time and space complexities from cubic and quadratic with respect to the sequence length, respectively, to linear. This significant improvement enables efficient modeling of long-term dynamics without sacrificing accuracy or interpretability. Extensive experiments demonstrate that S-MNN matches the original MNN in precision while substantially reducing computational resources. Consequently, S-MNN can drop-in replace the original MNN in applications, providing a practical and efficient tool for integrating mechanistic bottlenecks into neural network models of complex dynamical systems.

@inproceedings{chen2024scalable,
  title = {Scalable Mechanistic Neural Networks},
  author = {Chen, Jiale and Yao, Dingling and Pervez, Adeel and Alistarh, Dan and Locatello, Francesco},
  booktitle = {International Conference on Learning Representations (ICLR 2025)},
  year = {2025},
}

-

Propagating Model Uncertainty through Filtering-based Probabilistic Numerical ODE Solvers
Dingling Yao, Filip Tronarp, and Nathanael Bosch
In International Conference on Probabilistic Numerics 2025 (ProbNum 2025, oral), 2025

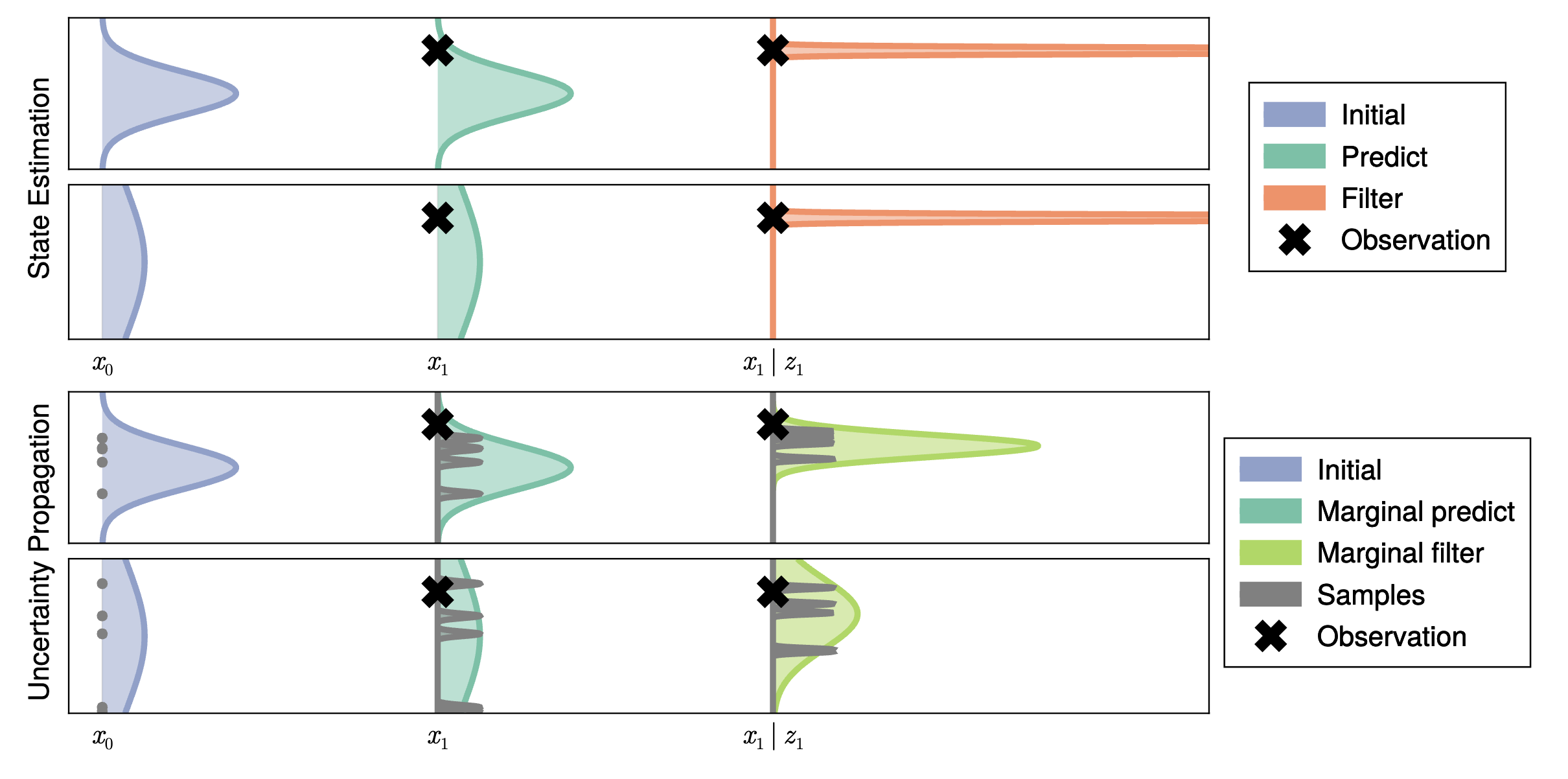

Filtering-based probabilistic numerical solvers for ordinary differential equations (ODEs), also known as ODE filters, have been established as efficient methods for quantifying numerical uncertainty in the solution of ODEs. In practical applications, however, the underlying dynamical system often contains uncertain parameters, requiring the propagation of this model uncertainty to the ODE solution. In this paper, we demonstrate that ODE filters, despite their probabilistic nature, do not automatically solve this uncertainty propagation problem. To address this limitation, we present a novel approach that combines ODE filters with numerical quadrature to properly marginalize over uncertain parameters, while accounting for both parameter uncertainty and numerical solver uncertainty. Experiments across multiple dynamical systems demonstrate that the resulting uncertainty estimates closely match reference solutions. Notably, we show how the numerical uncertainty from the ODE solver can help prevent overconfidence in the propagated uncertainty estimates, especially when using larger step sizes. Our results illustrate that probabilistic numerical methods can effectively quantify both numerical and parametric uncertainty in dynamical systems.

@inproceedings{yao2025propagating,
  title = {Propagating Model Uncertainty through Filtering-based Probabilistic Numerical ODE Solvers},
  author = {Yao, Dingling and Tronarp, Filip and Bosch, Nathanael},
  booktitle = {International Conference on Probabilistic Numerics 2025 (ProbNum 2025, oral)},
  year = {2025},
}

2024

-

Multi-View Causal Representation Learning with Partial Observability
Dingling Yao, Danru Xu, Sebastien Lachapelle, Sara Magliacane, Perouz Taslakian, Georg Martius, Julius von Kügelgen, and Francesco Locatello
The Twelfth International Conference on Learning Representations (ICLR 2024 Spotlight), 2024

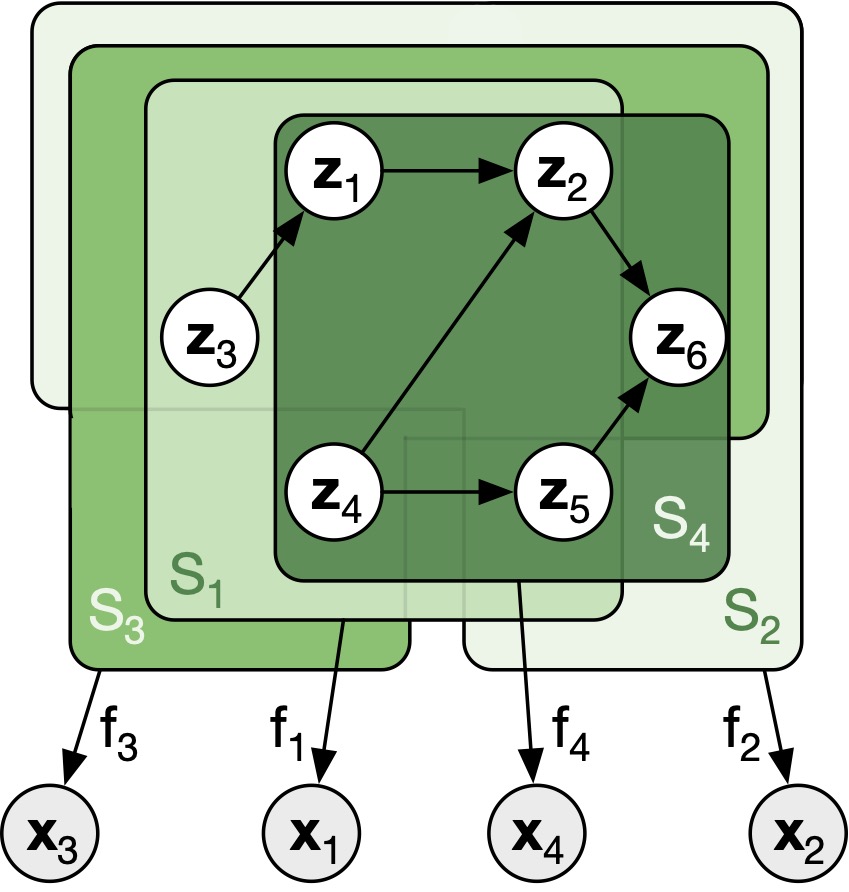

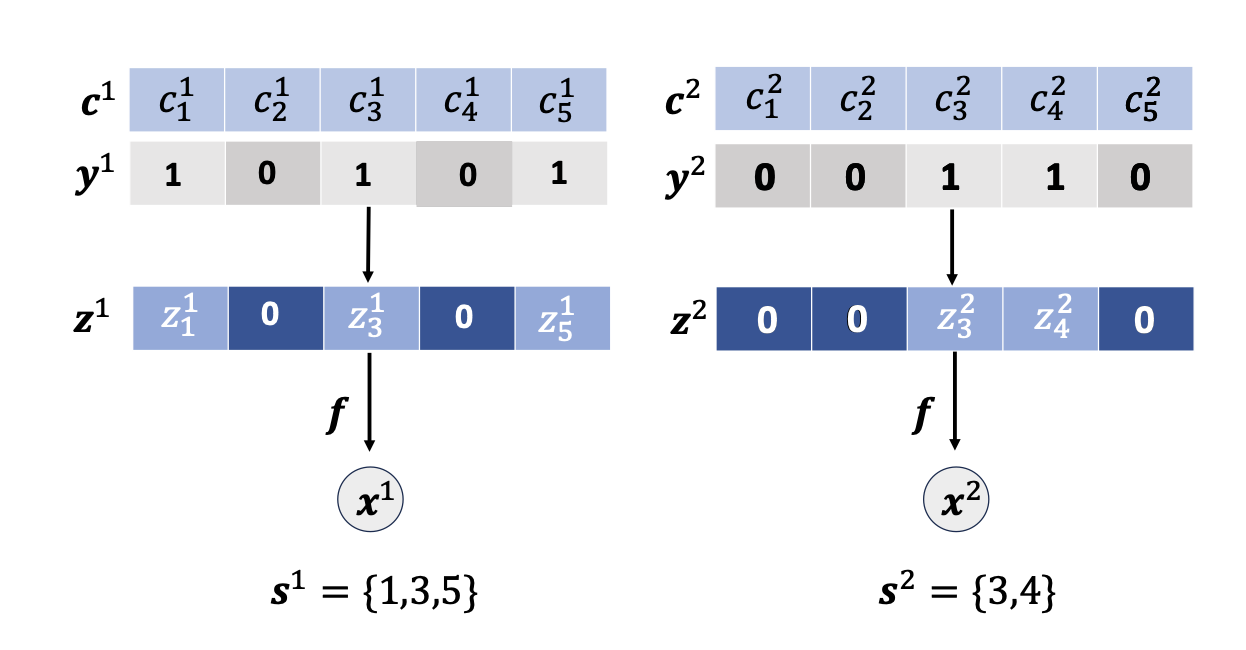

We present a unified framework for studying the identifiability of representations learned from simultaneously observed views, such as different data modalities. We allow a partially observed setting in which each view constitutes a nonlinear mixture of a subset of underlying latent variables, which can be causally related. We prove that the information shared across all subsets of any number of views can be learned up to a smooth bijection using contrastive learning and a single encoder per view. We also provide graphical criteria indicating which latent variables can be identified through a simple set of rules, which we refer to as identifiability algebra. Our general framework and theoretical results unify and extend several previous works on multi-view nonlinear ICA, disentanglement, and causal representation learning. We experimentally validate our claims on numerical, image, and multi-modal data sets. Further, we demonstrate that the performance of prior methods is recovered in different special cases of our setup. Overall, we find that access to multiple partial views enables us to identify a more fine-grained representation, under the generally milder assumption of partial observability.

@article{yao2024multiview,
  title = {Multi-View Causal Representation Learning with Partial Observability},
  author = {Yao, Dingling and Xu, Danru and Lachapelle, Sebastien and Magliacane, Sara and Taslakian, Perouz and Martius, Georg and von K{\"u}gelgen, Julius and Locatello, Francesco},
  journal = {The Twelfth International Conference on Learning Representations (ICLR 2024 Spotlight)},
  year = {2024},
}

-

A Sparsity Principle for Partially Observable Causal Representation Learning
Danru Xu, Dingling Yao, Sebastien Lachapelle, Perouz Taslakian, Julius von Kügelgen, Francesco Locatello, and Sara Magliacane
International Conference on Machine Learning, 2024

Causal representation learning (CRL) aims at identifying high-level causal variables from low-level data, e.g. images. Most current methods assume that all causal variables are captured in the high-dimensional observations. The few exceptions assume multiple partial observations of the same state, or focus only on the shared causal representations across multiple domains. In this work, we focus on learning causal representations from data under partial observability, i.e., when some of the causal variables are masked and therefore not captured in the observations, the observations represent different underlying causal states and the set of masked variables changes across the different samples. We introduce two theoretical results for identifying causal variables in this setting by exploiting a sparsity regularizer. For linear mixing functions, we provide a theorem that allows us to identify the causal variables up to permutation and element-wise linear transformations without parametric assumptions on the underlying causal model. For piecewise linear mixing functions, we provide a similar result that allows us to identify Gaussian causal variables up to permutation and element-wise linear transformations. We test our theoretical results on simulated data, showing their effectiveness.

@article{xu2023sparsity,
  title = {A Sparsity Principle for Partially Observable Causal Representation Learning},
  author = {Xu, Danru and Yao, Dingling and Lachapelle, Sebastien and Taslakian, Perouz and von K{\"u}gelgen, Julius and Locatello, Francesco and Magliacane, Sara},
  journal = {International Conference on Machine Learning},
  year = {2024},
}

-

Marrying Causal Representation Learning with Dynamical Systems for Science
Dingling Yao, Caroline Muller, and Francesco Locatello
Neural Information Processing Systems, 2024

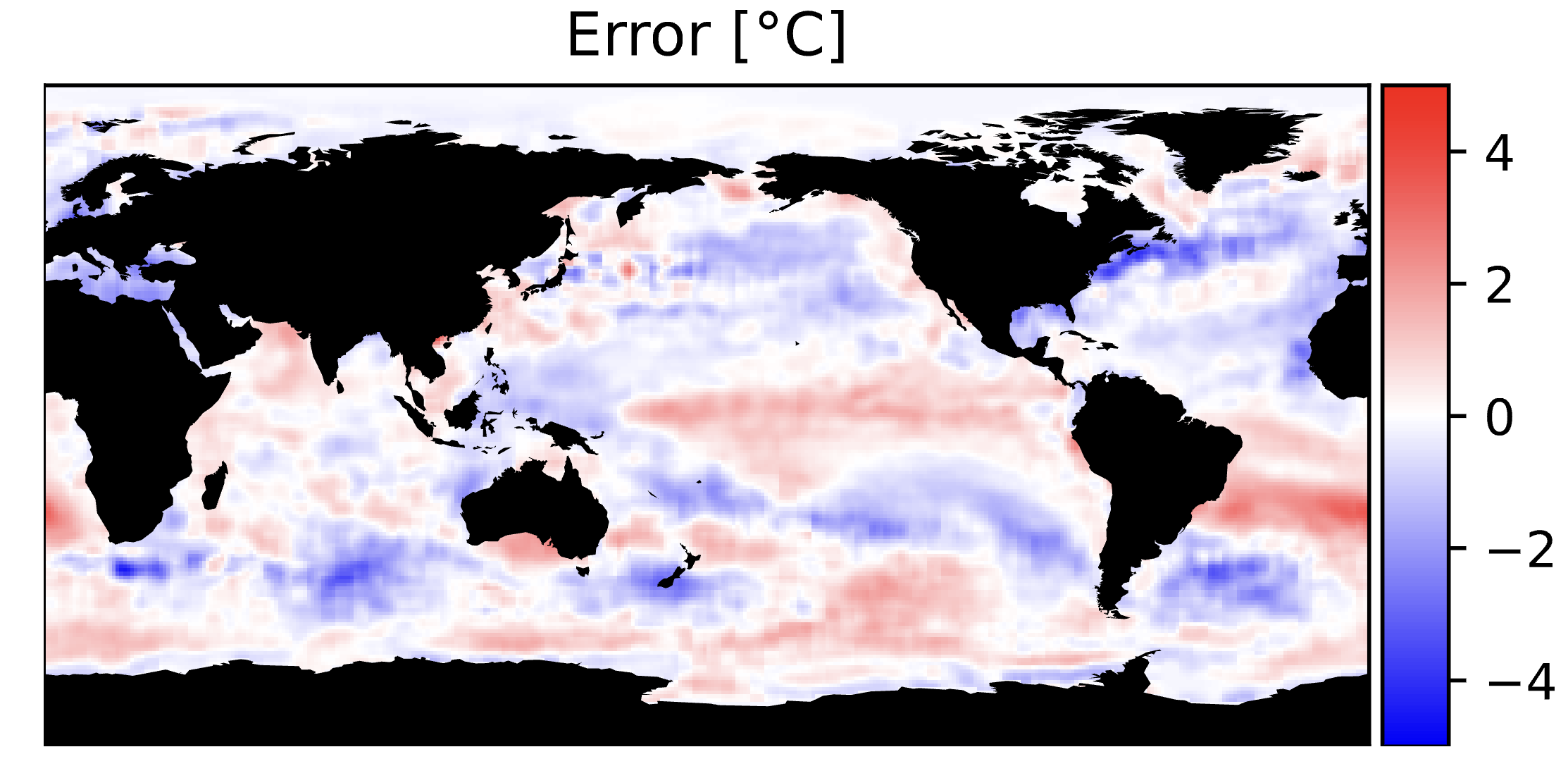

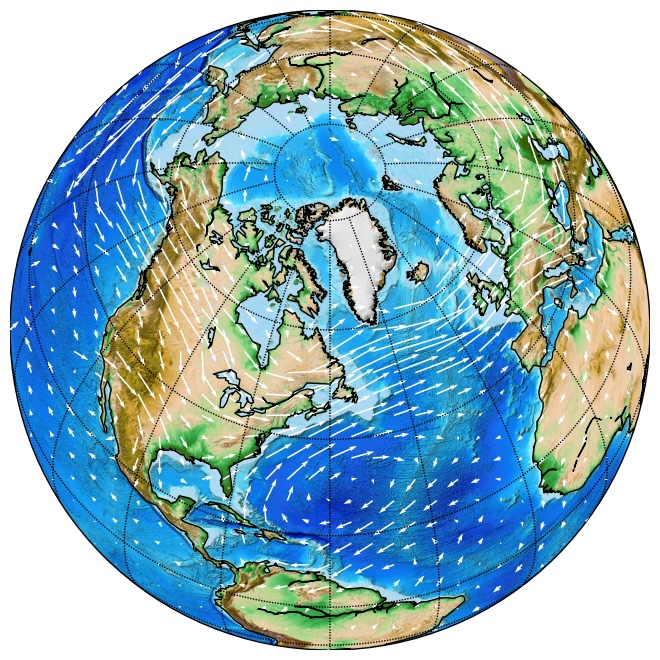

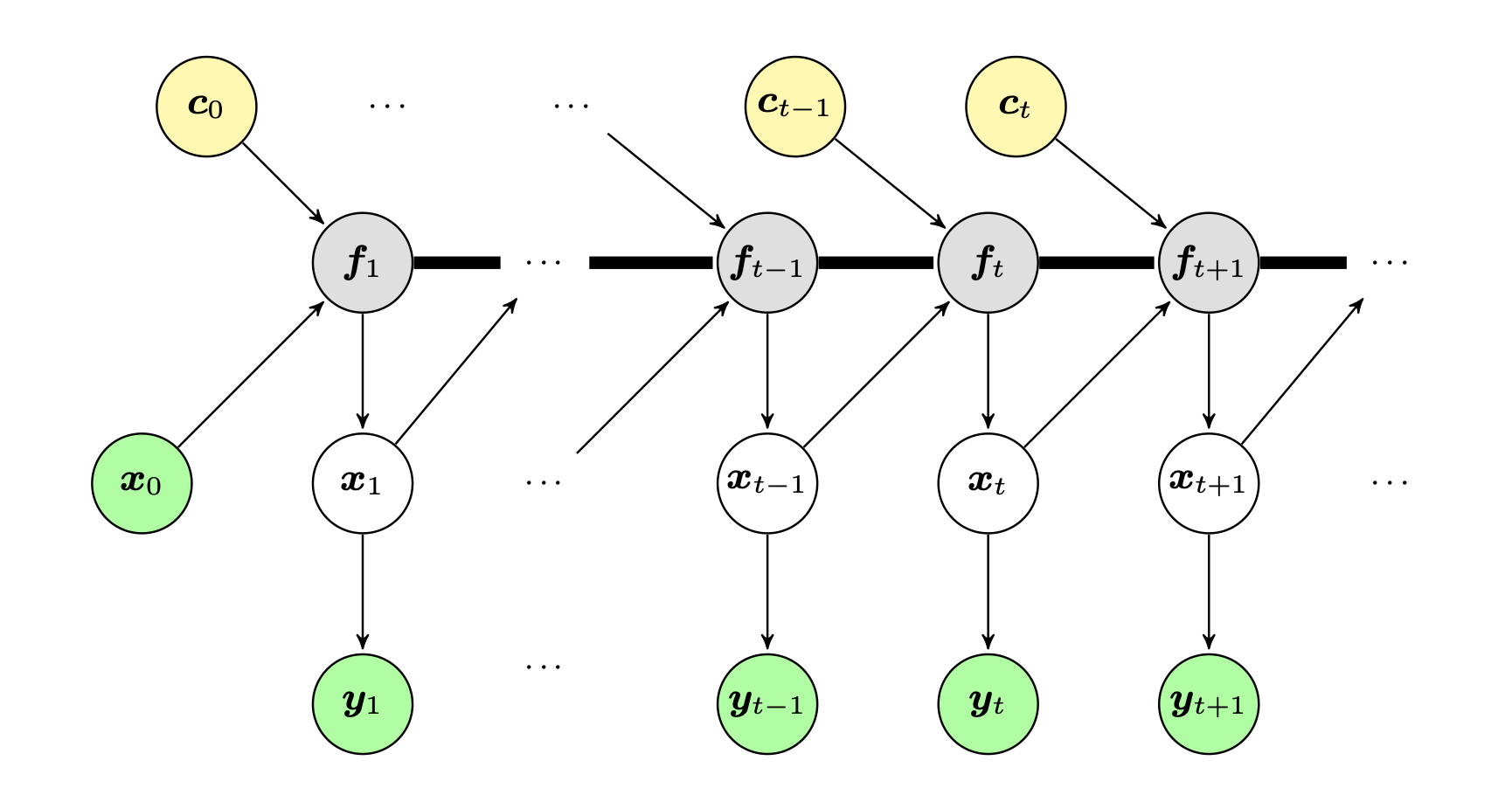

Causal representation learning promises to extend causal models to hidden causal variables from raw entangled measurements. However, most progress has focused on proving identifiability results in different settings, and we are not aware of any successful real-world application. At the same time, the field of dynamical systems benefited from deep learning and scaled to countless applications but does not allow parameter identification. In this paper, we draw a clear connection between the two and their key assumptions, allowing us to apply identifiable methods developed in causal representation learning to dynamical systems. At the same time, we can leverage scalable differentiable solvers developed for differential equations to build models that are both identifiable and practical. Overall, we learn explicitly controllable models that isolate the trajectory-specific parameters for further downstream tasks such as out-of-distribution classification or treatment effect estimation. We experiment with a wind simulator with partially known factors of variation. We also apply the resulting model to real-world climate data and successfully answer downstream causal questions in line with existing literature on climate change.

@article{yao2024marrying,
  title = {Marrying Causal Representation Learning with Dynamical Systems for Science},
  author = {Yao, Dingling and Muller, Caroline and Locatello, Francesco},
  journal = {Neural Information Processing Systems},
  year = {2024},
}

2021

-

Active Learning in Gaussian Process State Space Model
Hon Sum Alec Yu, Dingling Yao, Christoph Zimmer, Marc Toussaint, and Duy Nguyen-Tuong
Machine Learning and Knowledge Discovery in Databases. Research Track: European Conference, ECML PKDD 2021, Bilbao, Spain, September 13–17, 2021, Proceedings, Part III 21, 2021

We investigate active learning in Gaussian Process state-space models (GPSSM). Our problem is to actively steer the system through latent states by determining its inputs such that the underlying dynamics can be optimally learned by a GPSSM. In order that the most informative inputs are selected, we employ mutual information as our active learning criterion. In particular, we present two approaches for the approximation of mutual information for the GPSSM given latent states. The proposed approaches are evaluated in several physical systems where we actively learn the underlying non-linear dynamics represented by the state-space model.

@article{yu2021active,
  title = {Active Learning in Gaussian Process State Space Model},
  author = {Yu, Hon Sum Alec and Yao, Dingling and Zimmer, Christoph and Toussaint, Marc and Nguyen-Tuong, Duy},
  journal = {Machine Learning and Knowledge Discovery in Databases. Research Track: European Conference, ECML PKDD 2021, Bilbao, Spain, September 13--17, 2021, Proceedings, Part III 21},
  pages = {346--361},
  year = {2021},
}